Introduction

Collecting attendance records in classroom settings is one of the most regularly utilized strategies in many colleges to enhance students’ academic performance. There is a considerable correlation between student presence and educational success; low participation is typically associated with poor accomplishment [1]. An appropriate strategy for validating and monitoring attendance data is essential to ensure the accuracy of a student’s attendance record. The typical method of collecting attendance is to distribute an attendance sheet around the classroom and have pupils sign their names. Another frequent method is headcount, in which the lecturer registers presence by calling each student’s name. One advantage of these manual attendance-taking systems is that no specific setting or technology is required. These manual procedures, however, have clear drawbacks [2], [3].

The traditional student attendance system is known to be time-consuming, inaccurate, and hard to follow. It therefore becomes imperative to eradicate or minimize the deficiencies identified in this archaic method. Manual approaches not only squander important class time but also risk admitting impostors. In addition, paper attendance records are difficult to handle and are easily lost if not kept carefully. Recently, a variety of facial recognition-based algorithms for managing attendance automatically have been created, including local and hybrid methods that describe a face image using either just a few of its properties or the whole image [4]–[7].

In the realm of computer vision, face recognition is one of the most extensively used biometric identification technologies [8]. Face recognition-based attendance systems typically comprise picture collecting, dataset creation, face detection, and face recognition. A face, unlike a fingerprint, may be easily identified by a human. Human face recognition systems have become a significant element in automated participation systems due to their ease of acquisition and dependable and polite interactions [9]–[11].

The Convolutional Neural Network (CNN) has dramatically altered the field of face recognition because it can autonomously learn the features required for classification during the training phase, without a separate feature extraction stage [12]. Nevertheless, building CNN architectures raises various difficulties, such as high computing costs for input processing and determining the best CNN features (model) for each task [13]–[17]. Thus, this study aims to enhance CNN performance using a Genetic Algorithm (GA) by reducing the computational expense of data processing and determining the best CNN features (structure) for a face-based attendance system.

The purpose of this research is to solve the problems that traditional university attendance systems encounter. Traditional attendance methods are time-consuming and prone to mistakes, resulting in erroneous attendance records. Moreover, manual attendance methods are unsuitable for big classrooms, and it is difficult to keep track of students who may attempt to trick the system via proxy attendance or by faking attendance records. Automatic attendance systems based on face recognition technology can circumvent these restrictions. Such a system may record attendance reliably and rapidly, minimize fraud, and remove the need for human data entry. The accuracy of facial recognition systems, on the other hand, is highly reliant on the quality of the input data and the algorithm employed for recognition. As a result, an upgraded CNN can help increase the accuracy and efficiency of the attendance monitoring system.

The improved CNN may be used to overcome typical problems in face recognition systems such as occlusion, lighting fluctuations, and position variations. This technique may be used with other technologies, such as machine learning, to enhance recognition accuracy and decrease false positives. As a result, this has the potential to deliver an efficient, precise, and dependable attendance monitoring system that may save time, eliminate mistakes, and promote student responsibility.

Related Work

Pandiselvi et al. [7] introduced an RFID-based smart class attendance monitoring system with human identification for absentees. The study is based on a small and dependable classroom attendance system employing an RFID card and facial recognition technology. MATLAB was used to validate each student’s face, and a buzzer was employed in the system. The system identifies proxy attendance and stores it in a log file. It then checks the list of absentees and sends a message to the appropriate or specified number. Attendance is taken using RFID and facial verification, and the system has a reported efficiency of 98%.

Ikhar et al. [10] illustrate how an automated attendance system works in a classroom setting. There are various approaches to face recognition, the most well-known of which is Principal Component Analysis (PCA). The authors suggest using PCA to create an automated attendance system based on facial recognition. Eigenface is a method for reducing the dimensionality of the face space by applying PCA to facial characteristics. The system is made up of a dataset including a set of face patterns for each individual. According to the authors, the technology is sufficiently safe, dependable, and widely available for use in a variety of applications such as safety, industry, education, and facial detection.

Pei et al. [3] proposed Deep Learning (DL) face recognition using data augmentation based on orthogonal experiments. Data augmentation was introduced as a viable solution to the over-fitting of CNN architectures caused by inadequate samples. The main idea behind data augmentation is to produce virtual samples that enlarge the training dataset and thereby reduce over-fitting. To expand the training samples, the authors used geometric transformations, changes in image brightness, and various filter operations. Moreover, they used the orthogonal experimental approach to examine the influence of these processing techniques on face recognition accuracy. The original test images were then supplemented using the best data augmentation method identified by the orthogonal experiments. Their class attendance-taking approach with data augmentation yielded an accuracy of 86.3%, with more data gathered over a term improving the accuracy to 98.1% [3].

Amit et al. [11] proposed an automated attendance system using facial recognition. The study applies face recognition to employee records to mark attendance. The proposed application is active during the working hours of the organization. The system’s camera is fitted at the entrance to scan the face of anyone who enters the office; the application captures the image and sends it to the processing side, which recognizes the employee’s face. Finally, the application marks the recognized employee as present. An employee who is not recognized by the application, i.e., who is not present, is marked as absent for the day. The proposed system requires less hardware support and less processing time than conventional approaches such as signing papers, Radio Frequency Identification (RFID), and other biometrics, which makes it more efficient for organizations to use in real-time applications [8].

Baheti et al. [18] developed an automatic attendance system using a DL-based CNN technique for face recognition. They began by photographing students in a classroom and then used the OpenCV module to detect and frame the faces in the picture. The frames were then enhanced using an image-improving technique in the next phase. In the last step of deployment, they trained a CNN on these face photos and matched them with the student information stored in the database, updating the students’ attendance records.

Ahmed et al. [19] created a real-time student attendance system for use in small-scale contexts like classrooms. Three separate stages were employed: face detection, identification, and counting. The Histogram of Oriented Gradients technique was used to detect faces. A CNN was utilized to extract facial characteristics for face recognition, and a Support Vector Machine was utilized to recognize individual faces in the picture. Lastly, during the counting stage, a Haar cascade classifier was employed to count the number of faces in the picture. The resulting system identified faces in the collected image with a classification accuracy of 99.75%. The authors present the method as a suitable replacement for the previous manual technique.

Joseph et al. [20] introduced a simultaneous facial detection monitoring system based on CNN and OpenCV. The research findings demonstrated the efficiency of the suggested approach, with classification results of around 98%, a precision of 96%, and a recall of 96%. As a result, the suggested approach is a viable face recognition system. Most face recognition technologies, however, have difficulty detecting faces under conditions such as noise, pose variation, facial expression, lighting, and occlusion, which leads to low accuracy.

Filippidou et al. [21] concentrated on the single-sample face identification problem, with the goal of developing a real-time visual-based awareness solution. Five well-known pre-trained CNNs were tested in this regard. The study findings indicate that DenseNet121 was the best choice for use with active learning strategies (up to 99% top-1 accuracy), as well as the strongest option for the single-sample-per-person challenge, which arises when deep CNNs are applied to limited data.

Sawhney et al. [22] offered a methodology for building an autonomous system to track the attendance of students taking a class, utilizing Eigenface values, PCA, and CNN. Identified faces are associated with students by matching them against the student photographs held in the dataset. The authors regard this strategy as an effective method for managing student attendance and documentation. Dang [23] employed an upgraded FaceNet model architecture based on a MobileNetV2 backbone with an SSD component. Depthwise separable convolution was used in the enhanced framework to minimize model size and computing complexity while maintaining excellent accuracy and processing performance. The aim was to tackle the challenge of detecting a person entering and departing an area while working within the resource limitations of mobile devices. The technique produced good outcomes in practice, with more than 95% accuracy on a small sample of actual face photos.

| Author | Method | Result | Limitations |
|---|---|---|---|
| [7] | RFID-based smart class attendance | The study achieved an efficiency of 98%. | Small sample size; absentee identification with limited validation. |
| [10] | Eigenface method with PCA | The system is secure enough, reliable, and available for use in various applications. | Lack of a robust tracking system. |
| [3] | DL using data augmentation based on orthogonal experiments | The study achieved an accuracy of 86.3% with data augmentation. | Overfitting of the training dataset. |
| [11] | RFID, biometrics | The study achieved a shorter processing time to capture attendance. | Human error in fitting the camera to capture faces. |
| [18] | DL CNNs | The trained facial images are compared with the database images to capture attendance. | Attendance was not captured in real time. |
| [19] | CNN, SVM, Haar | The system automatically captures student attendance and obtains an accuracy of 99.75%. | Limited dataset used for the classification. |
| [20] | CNN, OpenCV | The results showed a precision of 96% and a recall of 96%. | Difficulties in detecting faces. |
| [21] | CNN | The study indicates that DenseNet121 performs better for attendance capturing. | Limited data with a single testing sample. |
| [22] | Eigenface value, PCA, CNN | It captures attendance effectively. | Matching problem. |
| [23] | FaceNet on MobileNetV2 | The study achieved an accuracy of 95%. | Complexity problem. |

According to the reviewed studies, the accuracy achieved for attendance systems was insufficient for reliable real-time student identification. Therefore, it is important to solve the prevalent problem of recognition accuracy. This research presents a solution that addresses the problems identified in the existing studies. The research used CNN deep features and SVM for real-time student face identification to mark attendance automatically, saving time and improving reliability. A summary of the reviewed works is presented in Table 1.

Methodology

Data Acquisition

Face images were acquired via digital camera from students of Ajayi University, Oyo, Nigeria. A digital camera of at least 5 megapixels, which is sufficient for a sharp 8-by-10-inch print, was used. The captured images, at a resolution of 1600 by 1200 pixels, were downsized to 128 by 128 pixels. Sixty percent of the images were used for training and forty percent for testing the system, using the random sampling cross-validation method.
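The 60/40 random partition described above can be illustrated with a minimal sketch. The file names, the fixed seed, and the helper name `split_dataset` are assumptions introduced for illustration only; the study does not state how its sampling was implemented.

```python
import random

def split_dataset(items, train_fraction=0.6, seed=0):
    """Randomly partition items into training and testing subsets."""
    shuffled = list(items)
    # A fixed seed (an assumption) keeps the partition reproducible.
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

# Hypothetical file names standing in for the captured face images.
image_paths = [f"face_{i:03d}.png" for i in range(10)]
train_set, test_set = split_dataset(image_paths)
print(len(train_set), len(test_set))
```

Repeating the call with different seeds would give several random train/test partitions, which is one common reading of random sampling cross-validation.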

Data Pre-processing

Pre-processing was carried out to remove noise from the face images.

Conversion to Grayscale

An image is made up of a collection of square pixels (picture elements) arranged in columns and rows. Each picture element in an 8-bit grayscale image has a corresponding intensity value that falls between 0 and 255. The intensity of the pixel is represented by these values, where 255 denotes white pixels and 0 denotes black pixels. Each character’s sample picture size varies; however, for the sake of this study, a fixed size of 28 by 28 was used.
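As a rough illustration of the conversion, the sketch below maps RGB pixels to 8-bit intensities. The luminance weights 0.299, 0.587, and 0.114 are the widely used ITU-R BT.601 coefficients and are an assumption here, since the study does not state which conversion formula it applied; the nested-list image representation is likewise chosen only to keep the example dependency-free.

```python
def to_grayscale(rgb_image):
    """Convert rows of (R, G, B) tuples to rows of 0-255 intensities."""
    gray = []
    for row in rgb_image:
        gray.append([
            # Weighted sum of the channels (assumed BT.601 weights).
            min(255, round(0.299 * r + 0.587 * g + 0.114 * b))
            for (r, g, b) in row
        ])
    return gray

# A tiny 2x2 image: black, white, pure red, pure blue.
tiny = [[(0, 0, 0), (255, 255, 255)],
        [(255, 0, 0), (0, 0, 255)]]
gray = to_grayscale(tiny)
print(gray)
```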

Image Normalization

Histogram equalization was applied as image normalization to enhance contrast. The intensity levels of the image were redistributed using the cumulative distribution function, which determines the mapping between the grayscale values of the input image and the grayscale values of the transformed image.
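A minimal sketch of histogram equalization follows, assuming the common mapping in which each intensity is replaced by its normalized cumulative count scaled to the range 0 to 255. The function name and the list-of-lists image format are illustrative choices, not details taken from the study.

```python
def equalize_histogram(gray, levels=256):
    """Remap intensities through the normalized cumulative distribution."""
    flat = [p for row in gray for p in row]
    hist = [0] * levels
    for p in flat:
        hist[p] += 1
    # Cumulative distribution function (as raw counts).
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    total = len(flat)
    if total == cdf_min:
        # A constant image has no contrast to stretch.
        return [row[:] for row in gray]
    scale = (levels - 1) / (total - cdf_min)
    lookup = [max(0, round((c - cdf_min) * scale)) for c in cdf]
    return [[lookup[p] for p in row] for row in gray]

# A low-contrast 2x2 patch is stretched across the full range.
patch = [[100, 101], [102, 103]]
equalized = equalize_histogram(patch)
print(equalized)
```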

Thinning

The thinning algorithm is a morphological technique that is applied to binary images to remove certain foreground pixels.

Data Augmentation

Data augmentation techniques such as image scaling, image rotation, and image shearing were employed in this study.
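Scaling, rotation, and shearing can all be expressed as 2x2 linear maps applied about the image centre. The sketch below shows one way to do this with inverse mapping and nearest-neighbour sampling; the interpolation scheme, the fill value, and the parameter values are assumptions, as the study does not report them.

```python
import math

def affine_warp(image, matrix, fill=0):
    """Warp a 2-D grayscale image by a 2x2 matrix about its centre,
    using inverse mapping with nearest-neighbour sampling."""
    h, w = len(image), len(image[0])
    cy, cx = (h - 1) / 2, (w - 1) / 2
    (a, b), (c, d) = matrix
    det = a * d - b * c
    inv = ((d / det, -b / det), (-c / det, a / det))
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = x - cx, y - cy
            # Find the source pixel that lands on (x, y).
            sx = inv[0][0] * dx + inv[0][1] * dy + cx
            sy = inv[1][0] * dx + inv[1][1] * dy + cy
            ix, iy = round(sx), round(sy)
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = image[iy][ix]
    return out

def scale_matrix(factor):
    return ((factor, 0.0), (0.0, factor))

def rotation_matrix(degrees):
    t = math.radians(degrees)
    return ((math.cos(t), -math.sin(t)), (math.sin(t), math.cos(t)))

def shear_matrix(k):
    return ((1.0, k), (0.0, 1.0))

img = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
# Hypothetical augmentation parameters, chosen only for illustration.
augmented = [affine_warp(img, scale_matrix(1.5)),
             affine_warp(img, rotation_matrix(90)),
             affine_warp(img, shear_matrix(0.5))]
```

Each warped copy keeps the original image size, so the augmented samples can be fed to the same network input.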

Feature Extraction and Classification Phase Using Deep Learning Approach

With no requirement for manual feature selection, DL algorithms may be able to learn tasks directly from data. DL is a method for comprehending data, such as photographs, by learning several levels of representation and abstraction. End-to-end learning is used in DL for feature extraction and classification. Given a preprocessed face image, with features extracted for each modality, classification was performed using a DL method that combines an optimization tool (GA) with a CNN.

In this study, convolutional layers and max-pooling subsampling layers were combined to build the proposed CNN. Two fully connected classification layers make up the upper layers of the proposed CNN. The Softmax classifier then generates a probability distribution across the N class labels using the outputs of the last fully connected layer.
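The layer types named above can be sketched as a single forward pass in plain Python. This is only an illustration of the building blocks (convolution, max-pooling, a fully connected layer, and Softmax); the layer sizes, kernel values, and weights below are hypothetical and do not reproduce the architecture used in the study.

```python
import math

def conv2d(image, kernel):
    """Valid cross-correlation of a 2-D image with a 2-D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[y + i][x + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for x in range(ow)] for y in range(oh)]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling (sub-sampling)."""
    return [[max(fmap[y + i][x + j]
                 for i in range(size) for j in range(size))
             for x in range(0, len(fmap[0]) - size + 1, size)]
            for y in range(0, len(fmap) - size + 1, size)]

def dense(vector, weights, biases):
    """Fully connected layer: one weight row and one bias per output."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, biases)]

def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical 5x5 input, 2x2 kernel, and a 4-to-3 dense layer.
image = [[(x + y) % 5 for x in range(5)] for y in range(5)]
kernel = [[1, 0], [0, -1]]
features = max_pool(conv2d(image, kernel))          # 4x4 -> 2x2
flat = [v for row in features for v in row]
weights = [[0.1, -0.2, 0.3, 0.0],
           [0.0, 0.2, -0.1, 0.4],
           [0.3, 0.1, 0.0, -0.2]]
biases = [0.0, 0.1, -0.1]
probabilities = softmax(dense(flat, weights, biases))
print(probabilities)
```

The Softmax output sums to one, so each entry can be read as the estimated probability of one class label.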

Training and Classification

The datasets were used to generate sets for training and testing. The test set was used to monitor the network’s generalization capacity during the learning phase and to record the weight configuration that performed best, i.e., with the least validation error. The procedural steps followed to achieve the training and classification of modalities are as follows:

Step 1: Generate random population of \(N\), weight space \(\omega = [\omega_1, \omega_2, \dots, \omega_n]\), set parameter crossover probability \(pc\), mutation probability \(pm\).

Step 2: Forward pass: compute the output of the neuron at row \(x\), column \(y\) in the \(l\)th convolution layer and \(k\)th feature pattern, where \(f\) is the number of convolution cores in a feature pattern; the output of the neuron at row \(x\), column \(y\) in the \(l\)th sub-sample layer and \(k\)th feature pattern; the output of the \(j\)th neuron in the \(l\)th hidden layer \(H\), where \(s\) is the number of feature patterns in the sample layer; and the output of the \(i\)th neuron in the \(l\)th output layer \(F\).

Step 3: Backpropagation: output deviation of the \(k\)th neuron in output layer \(O\).

Step 4: Input deviation of the \(k\)th neuron in the output layer.

Step 5: Weight and bias variation of \(k\)th neuron in the output \(O\).

Step 6: Output bias of \(k\)th neuron in hidden layer \(H\).

Step 7: Input bias of \(k\)th neuron in hidden layer \(H\).

Step 8: Weight and bias variation in row \(x\), column \(y\) in the \(m\)th feature pattern, a former layer in front of \(k\) neurons in hidden layer \(H\).

Step 9: Output bias of row \(x\), column \(y\) in \(m\)th feature pattern, sub-sample layer \(S\).

Step 10: Input bias of row \(x\), column \(y\) in \(m\)th feature pattern, sub-sample layer \(S\).

Step 11: Weight and bias variation of row \(x\), column \(y\) in \(m\)th feature pattern, sub-sample layer \(S\).

Step 12: Output bias of row \(x\), column \(y\) in \(k\)th feature pattern, convolution layer \(C\).

Step 13: Input bias of row \(x\), column \(y\) in \(k\)th feature pattern, convolution layer \(C\).

Step 14: Evaluate Objective Function based on initial optimal weight features.

Step 15: Perform the following operations:

Selection {as selection pressure}.

Recombination {as the Pc used for selection of the features}.

Mutation {as the Pm used for selection of the features}.

Step 16: Generate new selected fused features with optimal weight \(\omega\).

Step 17: GOTO Step 3 until maximum iteration is reached.

Step 18: Output selected fused features with optimal weight \(\omega\) based on best fitness value.

Steps 2-14 are the procedural steps involved in standard CNN. Steps 15-18 are introduced to modify and optimize the weight of CNN for performance improvement.
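The GA operations in Steps 15-18 (selection, recombination with probability \(pc\), and mutation with probability \(pm\)) can be sketched on a plain weight vector. Everything specific here is an assumption made for illustration: tournament selection, single-point crossover, Gaussian mutation, elitism, the parameter values, and above all `toy_fitness`, which stands in for the objective the study evaluates on the CNN. The forward and backward passes of Steps 2-13 are not reproduced.

```python
import random

def tournament(population, fitness, rng):
    """Selection: keep the fitter of two randomly drawn individuals."""
    a, b = rng.sample(population, 2)
    return a if fitness(a) >= fitness(b) else b

def evolve_weights(fitness, n_weights, pop_size=20, generations=30,
                   pc=0.8, pm=0.1, seed=0):
    rng = random.Random(seed)
    population = [[rng.uniform(-1, 1) for _ in range(n_weights)]
                  for _ in range(pop_size)]
    best = max(population, key=fitness)
    history = [fitness(best)]
    for _ in range(generations):
        children = [best[:]]  # elitism: carry the best weights forward
        while len(children) < pop_size:
            p1 = tournament(population, fitness, rng)
            p2 = tournament(population, fitness, rng)
            child = p1[:]
            # Recombination with probability pc (single-point crossover).
            if n_weights > 1 and rng.random() < pc:
                cut = rng.randrange(1, n_weights)
                child = p1[:cut] + p2[cut:]
            # Mutation: perturb each weight with probability pm.
            child = [w + rng.gauss(0, 0.1) if rng.random() < pm else w
                     for w in child]
            children.append(child)
        population = children
        best = max(population, key=fitness)
        history.append(fitness(best))
    return best, history

# Hypothetical objective: closeness to a made-up target weight vector.
TARGET = [0.5, -0.3, 0.8]

def toy_fitness(weights):
    return -sum((w - t) ** 2 for w, t in zip(weights, TARGET))

best, history = evolve_weights(toy_fitness, n_weights=3)
print(history[0], history[-1])
```

Because the best individual is always copied into the next generation, the best fitness in `history` never decreases; in a real CNN-GA pipeline the fitness call would be far more expensive, since it would involve evaluating the network.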

Evaluation Measures

The performance of the CNN tuned with GA (CNN-GA) was evaluated using recognition accuracy, False Rejection Rate (FRR), computation time, and False Acceptance Rate (FAR).
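The study does not spell out its formulas, so the sketch below uses the commonly applied definitions as an assumption: FAR is the share of impostor attempts that are wrongly accepted, FRR is the share of genuine attempts that are wrongly rejected, and accuracy is the share of all decisions that are correct. The confusion counts are hypothetical and are not taken from the experiments.

```python
def biometric_rates(tp, tn, fp, fn):
    """Return (FAR, FRR, accuracy) as percentages from confusion counts."""
    far = 100 * fp / (fp + tn)        # false acceptances among impostors
    frr = 100 * fn / (fn + tp)        # false rejections among genuine users
    accuracy = 100 * (tp + tn) / (tp + tn + fp + fn)
    return far, frr, accuracy

# Hypothetical counts, for illustration only.
far, frr, accuracy = biometric_rates(tp=90, tn=95, fp=5, fn=10)
print(far, frr, accuracy)
```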

Results

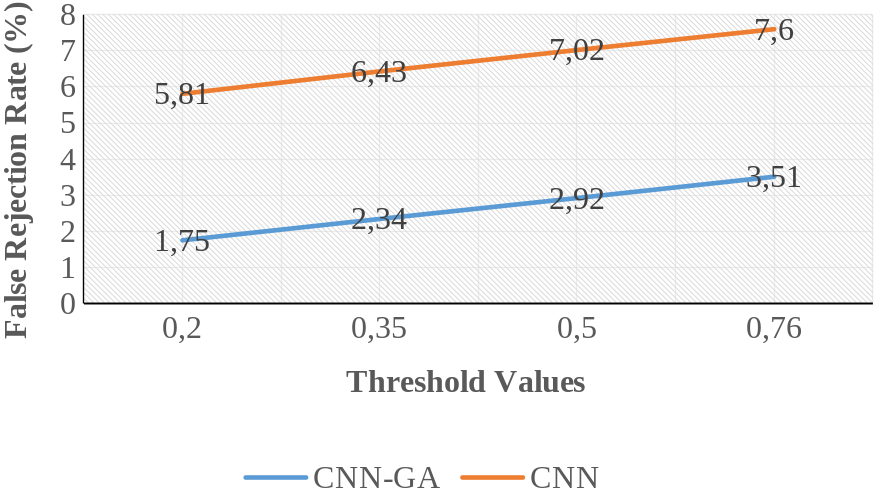

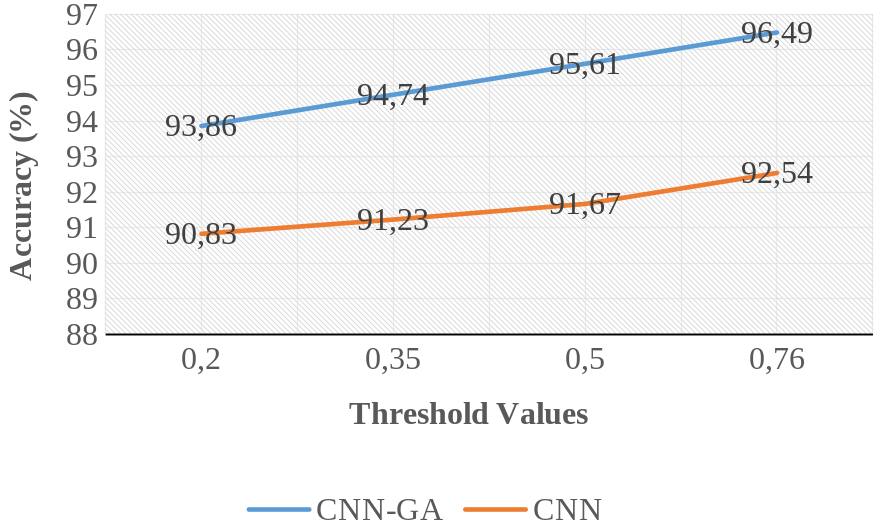

Table 2 shows the combined outcome of CNN and CNN-GA for all metrics at a resolution of 128 by 128 pixels, with a threshold value of 0.76. Regardless of the threshold value, the results show that the CNN-GA model has a shorter recognition time than the CNN model. Comparing the sensitivity, false positive rate, recognition accuracy, and specificity of the CNN and CNN-GA models at 128 by 128 pixels, the study found that the CNN-GA model performed better than the CNN model in terms of accuracy, specificity, and false-positive rate, as shown in Figures 1 and 2.

| Algorithm | FAR (%) | FRR (%) | Accuracy (%) | Time (sec) |
|---|---|---|---|---|
| CNN-GA | 3.51 | 3.51 | 96.49 | 76.25 |
| CNN | 7.02 | 7.60 | 92.54 | 97.01 |

The face recognition accuracy with CNN-GA was 96.49%, while the accuracy with CNN was 92.54%. It can be inferred from the performance metrics that CNN-GA gave the better result. At a threshold value of 0.76, CNN-GA achieved 96.49% accuracy at a False Acceptance Rate (FAR) of 3.51%, whereas CNN achieved 92.54% accuracy at a FAR of 7.02%.

Finally, the aforementioned results were obtained at the optimum threshold value, which was selected because it outperformed the other threshold values. In view of the above results, face recognition with CNN-GA gave higher accuracy owing to a high number of true positives together with a low number of false negatives.

Conclusion and Recommendation

Due to its high accuracy, a face-based attendance system would result in a more trustworthy, accurate, and secure attendance system on any repository system. In other words, the developed CNN-GA technique has ensured good classification performance and robustness against various attacks, with good computational efficiency in terms of its accuracy and time. This study has contributed to knowledge by developing an improved attendance system that provides robust monitoring. The results obtained show that face recognition with CNN-GA gave higher accuracy owing to a high number of true positives together with a low number of false negatives.

Authors’ Information

Olufemi S. Ojo is currently a Lecturer at the Department of Computer Sciences, Ajayi Crowther University, Oyo town, Nigeria. He has a Ph.D. in Computer Science from Ladoke Akintola University of Technology, Ogbomoso, Nigeria. His research focuses on Pattern Recognition, Optimization Techniques, and E-learning. He is a full member of the International Association of Engineers (IAEng) and Computer Professionals of Nigeria (CPN).

Mayowa O. Oyediran is a Senior Lecturer in the Department of Computer Engineering at Ajayi Crowther University, Oyo town, Nigeria. He has a Ph.D. in Computer Engineering from Ladoke Akintola University of Technology, Ogbomoso, Nigeria. He specializes in Distributed Systems and Applications with a focus on cloud computing, fog computing, MANETs, etc.

Babatunde J. Bamgbade is a Lecturer and Chief Program Analyst at both the Federal College of Forestry/Forestry Research Institute of Nigeria and the National Open University of Nigeria (NOUN). He has the following academic qualifications: B.Sc., M.Sc., MCS (Professional), and PGD, all in Computer Science, from the University of Ibadan, JABU, and Ambrose Alli University, respectively. He has several publications in refereed and reputable journals. His research specializations are Machine Learning and Software Development.

Emmanuel A. Adeniyi is currently a lecturer and researcher in the Department of Computer Sciences at Precious Cornerstone University, Ibadan, Nigeria. His area of research interest is information security, the computational complexity of algorithms, the Internet of Things, and machine learning. He has published quite a number of research articles in reputable journal outlets.

Godwin Nse Ebong is an accomplished professional passionate about technology and innovation. He holds a Master’s degree in Data Science from the University of Salford and a Bachelor of Science in Engineering from Caritas University. He is an expert in cloud engineering, data engineering, and data analysis, with a proven track record of developing data-driven solutions for leading organizations. Godwin Nse Ebong is a lifelong learner, constantly seeking new challenges and opportunities to expand his knowledge and expertise.

Sunday Adeola Ajagbe is a Ph.D. candidate in the Computer Engineering Department at the Ladoke Akintola University of Technology (LAUTECH), Ogbomoso, Nigeria. He is also a lecturer at First Technical University, Ibadan, Nigeria. He obtained MSc and BSc in Information Technology and Communication Technology respectively at the National Open University of Nigeria (NOUN), and he also has PGD in Electronics and Electrical Engineering at LAUTECH. He has earlier obtained HND and ND in Electrical and Electronics Engineering at The Polytechnic, Ibadan, Ibadan, Nigeria. His specialization includes Artificial Intelligence (AI), Natural language processing (NLP), Information Security, Data Science, the Internet of Things (IoT), Biomedical Engineering, and Smart solutions.

Authors’ Contributions

Olufemi S. Ojo participated in the conceptualization, validation, and supervision.

Mayowa O. Oyediran participated in the conceptualization and methodology.

Babatunde J. Bamgbade participated in the resources, software, project administration, and writing of the original draft.

Emmanuel A. Adeniyi participated in the methodology, resources, and writing of the original draft.

Godwin Nse Ebong participated in the resources, editing, and review.

Sunday Adeola Ajagbe participated in the editing, review, visualization, and supervision.

Competing Interests

The authors declare that they have no competing interests.

Funding

No funding was received for this project.